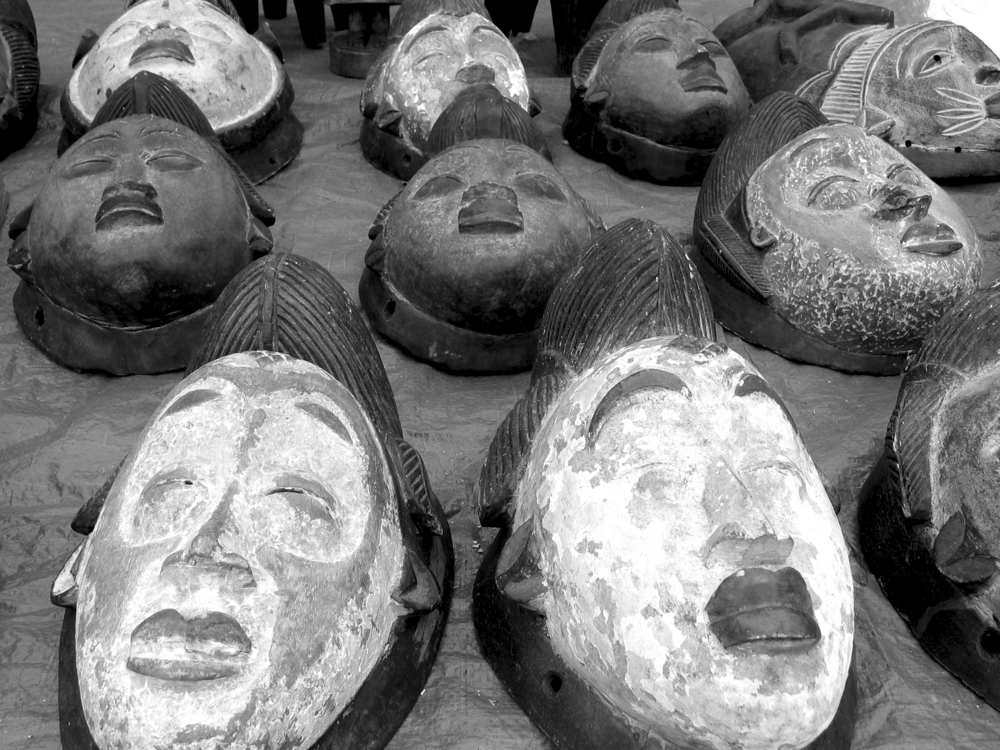

A recent review of the field of facial coding, including the use of artificial intelligence to predict emotional states, concludes that there is little scientific evidence that you can infer how someone feels based on their facial movements.

Companies such as Microsoft, IBM and Amazon, as well as governments including China, are investing in and developing “emotion recognition” algorithms for a variety of tasks. Such algorithms are based on an assumption of universal facial micro-expressions. They assume that if someone has a furrowed brow and pursed lips then they are angry, and if their eyes are wide, eyebrows raised and mouth stretched then they are afraid.

The studies reviewed show that there is simply no evidence to support these underlying assumptions. Lisa Feldman Barrett, one of the review’s authors, says, “… detect[ing] a scowl … [is] not the same thing as detecting anger”. The five authors of the study, all from different backgrounds, reached a clear consensus after reviewing more than 1,000 studies over more than two years.

The overall finding is that emotions are expressed in a variety of different ways, which makes it very difficult to infer how someone feels based only on their facial expressions. For example, on average people scowl only 30% of the time when they are angry, making a scowl one of many expressions of anger, but not the expression of anger. That leaves about 70% of the time when people are angry and do not scowl. More importantly, sometimes people scowl when they are not angry.
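To see why the last point matters so much, here is a minimal sketch of the arithmetic using Bayes' rule. Only the 30% figure comes from the review. The other two inputs (how often people scowl when they are not angry, and how often people are angry at all) are hypothetical values chosen purely for illustration, as is the function name.

```python
def p_angry_given_scowl(p_scowl_given_angry, p_scowl_given_not_angry, p_angry):
    """Bayes' rule: probability that someone is angry, given that they scowl."""
    # Total probability of seeing a scowl, whether the person is angry or not.
    p_scowl = (p_scowl_given_angry * p_angry
               + p_scowl_given_not_angry * (1 - p_angry))
    return p_scowl_given_angry * p_angry / p_scowl


# 0.30 is the figure quoted from the review.
# 0.10 and 0.05 are ASSUMED, illustrative values, not results from the paper.
posterior = p_angry_given_scowl(
    p_scowl_given_angry=0.30,
    p_scowl_given_not_angry=0.10,  # assumption: scowling while not angry
    p_angry=0.05,                  # assumption: how often people are angry
)
print(f"P(angry | scowl) = {posterior:.2f}")  # prints 0.14 under these assumptions
```

Under these assumed numbers, a detected scowl would correspond to actual anger only about one time in seven. Different assumptions give different answers, but the general point holds: detecting a scowl is not the same as detecting anger.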

This implies that using AI to evaluate emotions is highly misleading, especially in high-stakes situations such as a court hearing, a hiring decision, an immigration check or a medical diagnosis. Would any of us accept an accuracy of 30%? The same is true for market research, where automated tools for measuring facial expression are increasingly used, often based on databases that are limited in both size and cultural scope.

This is not to deny that common facial expressions exist, or that there is a powerful common belief that they have meaning. The reality, though, is that when we evaluate people in real life we are evaluating much more than their facial movements, and especially the context of their emotions.

Neglecting context is one of the important methodological flaws in many studies of emotion. Others include the use of “posed” expressions, which may not reflect natural expressions, and the use of a limited lexicon of choices, which constrains studies to fit within a pre-specified framework of which emotions are important. The accuracy of emotion labels depends on the emotion, ranging from just under 50% for happiness (remarkable given that it is the only positive emotion used in many studies) to under 25% for disgust.

In summary, it is not (yet) possible to “fingerprint” emotions through facial expressions. Emotions are more complex than moving a muscle here or there on the face, and there are no such things as universal emotional states. Emotional categories are varied, complex, situational and cultural, and this needs to be reflected in studies of emotion, including market research studies.

You can find the full paper here and a shorter summary of the implications for AI here. You can also find an article on recent developments in emotion research here.

REFERENCES

“Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements” by Barrett, Adolphs, Marsella, Martinez & Pollak, Psychological Science in the Public Interest, 2019, Vol 20 (1), 1-68.

“AI ‘emotion recognition’ can’t be trusted” by James Vincent, The Verge, 25 July 2019.